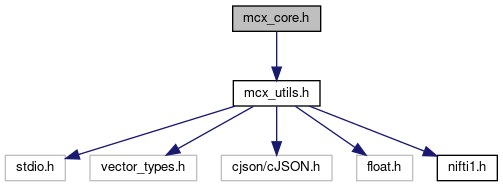

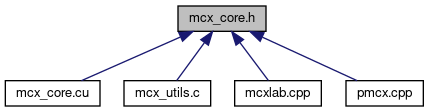

MCX GPU kernel header file. More...

#include "mcx_utils.h"

Go to the source code of this file.

Macros | |

| #define | ABS(a) ((a)<0?-(a):(a)) |

| #define | MAX_ACCUM 1000.f |

| #define | ROULETTE_SIZE 10.f |

| #define | GPUDEBUG(x) |

Typedefs | |

| typedef float4 | MCXpos |

| typedef unsigned char | uchar |

Functions | |

| struct | __align__ (16) KernelParams |

| Simulation constant parameters stored in the constant memory. More... | |

| void | mcx_run_simulation (Config *cfg, GPUInfo *gpu) |

| Master host code for the MCX simulation kernel (!!!Important!!!) More... | |

| int | mcx_list_gpu (Config *cfg, GPUInfo **info) |

| Utility function to query GPU info and set active GPU. More... | |

Variables | |

| SVox | |

| Stokes | |

| MCXsp | |

| MCXdir | |

| MCXtime | |

| Gpos | |

| Gdir | |

| Glen | |

| Gprop | |

| MCXParam | |

Detailed Description

MCX GPU kernel header file.

Macro Definition Documentation

◆ ABS

| #define ABS | ( | a | ) | ((a)<0?-(a):(a)) |

macro to take absolute value

◆ GPUDEBUG

| #define GPUDEBUG | ( | x | ) |

printing commands are ignored if MCX_DEBUG macro is not defined

◆ MAX_ACCUM

| #define MAX_ACCUM 1000.f |

To avoid round-off errors when adding very small number to large number, we split the GPU accumulation array into 2 copies, if the first copy exceeds this limit, we add the first copy to the 2nd copy, and clear the first one. See https://github.com/fangq/mcx/issues/41 for details

◆ ROULETTE_SIZE

| #define ROULETTE_SIZE 10.f |

Russian Roulette size

Typedef Documentation

◆ MCXpos

Function Documentation

◆ __align__()

| struct __align__ | ( | 16 | ) |

Simulation constant parameters stored in the constant memory.

This struct stores all constants used in the simulation.

< flag if a photon is inside a mixed voxel

< label of the tissue type at -nv direction (lower region)

< label of the tissue type at +nv direction (upper region)

< flag if a photon is in the upper region

< total light intensity: IH + IV

< IH - IV

< I(+pi/4) - I(-pi/4)

< IR - IL

< indicator of tissue type under split-voxel MC (SVMC) mode

< reference point of the intra-voxel interface

< normal vector of the intra-voxel interafece (direction: lower -> upper)

< directional vector of the photon, x-component

< directional vector of the photon, y-component

< directional vector of the photon, z-component

< total number of scattering events

< remaining unit-less scattering length = length * scattering coeff

< photon elapse time, unit=s

< photon total pathlength inside a voxel, in grid unit

< number of completed photons

< volume voxel size in grid unit, always 1,1,1

< minimum step of the 3, always 1

< starting time of the current time gate, unit is s

< end time of the current time gate, unit is s

< maximum time gate length, same as cfg.tend

< 1/(speed of light in the vacuum)

< flag if mcx outputs fluence volume

< flag if mcx performs reflection calculations

< flag if mcx perform reflection calculations at internal boundaries

< flag if mcx outputs detected photon partial length data

< reciprocal of the step size

< initial position vector, for pencil beam

< initial directon vector, for pencil beam

< initial stokes parameters, for polarized photon simulation

< maximum index in x/y/z directions for out-of-bound tests

< maximum index used to convert x/y/z to 1D array index

< 3D coordinates of one diagonal of the cached region (obsolete)

< 3D coordinates of the other diagonal of the cached region (obsolete)

< stride for cachebox data acess (obsolete)

< threshold of weight to trigger Russian roulette

< square of the radius within which the data is cached (obsolete)

< time steps for tMCimg like weight accummulation (obsolete)

< type of the source

< source parameters set 1

< source parameters set 2

< flag if the time-of-flight in the background is counted

< max number of detected photons

< max number of media labels

< max number of detectors

< max number of media labels for polarized light

< max number of time gates

< pre-computed 1D index of the photon at launch for pencil/isotropic beams

< pre-computed media index of the photon at launch for pencil/isotropic beams

< whether atomic operations are used

< max steps that photon can travel in the background before entering non-zero voxels

< flag if one need to save the detected photon seeds for replay

< flag if one need to save diffuse reflectance data in the 0-voxel layer next to the boundary

< 0 do not perform specular reflection at launch, 1 do specular reflection

< offset of the seed, not used

< RNG seed passted from the host

< Type of output to be accummulated

< how many photons to be simulated in a thread

< how many threads need to simulate 1 more photon above the basic load (threadphoton)

< use an approximated stepping approach, not used

< debug flags

< detected photon save flags

< length of buffer per detected photon

< per-medium detected photon data length

< photon-sharing buffer offset

< format of the media buffer

< max number of positions to be saved to save photon trajectory when -D M is used

< how many scattering events after which mus/g can be approximated by mus'

< is the domain a 2D slice?

< select which detector to replay, 0 for all, -1 save all separately

< total number of source patterns

< number of samples for inverse-cdf, will be added by 2 to include -1 and 1 on the two ends

< modulation angular frequency (2*pi*f), in rad/s, for FD/RF replay

< boundary conditions

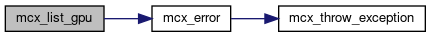

◆ mcx_list_gpu()

Utility function to query GPU info and set active GPU.

This function query and list all available GPUs on the system and print their parameters. This is used when -L or -I is used.

- Parameters

-

[in,out] cfg the simulation configuration structure [out] info the GPU information structure

◆ mcx_run_simulation()

Master host code for the MCX simulation kernel (!!!Important!!!)

This function is the master host code for the MCX kernel. It is responsible for initializing all GPU variables, copy data from host to GPU, launch the kernel for simulation, wait for competion, and retrieve the results.

- Parameters

-

[in,out] cfg the simulation configuration structure [in] gpu the GPU information structure

p0 - initial photon positions for pencil/isotropic/cone beams, used to initialize {p={x,y,z,w}} state in the GPU kernel

c0 - initial photon direction state, used to initialize {MCXdir v={vx,vy,vz,}} state in the GPU kernel

stokesvec - initial photon polarization state, described by Stokes Vector

gpuphoton - number of photons to be simulated per thread, determined by total workload and thread number

photoncount - number of completed photons returned by all thread, output

mcgrid - GPU grid size, only use 1D grid, used when launching the kernel in cuda <<<>>> operator

mcblock - GPU block size, only use 1D block, used when launching the kernel in cuda <<<>>> operator

sharedbuf - shared memory buffer length to be requested, used when launching the kernel in cuda <<<>>> operator

dimxyz - output volume variable field voxel count, Nx*Ny*Nz*Ns where Ns=cfg.srcnum is the pattern number for photon sharing

media - input volume representing the simulation domain, format specified in cfg.mediaformat, read-only

field - output volume to store GPU computed fluence, length is dimxyz

rfimag - imaginary part of the RF Jacobian, length is dimxyz

Ppos - per-thread photon state initialization host buffers

Pseed - per-thread RNG seed initialization host buffers

Pdet - output host buffer to store detected photon partial-path and other per-photon data

energy - output host buffers to accummulate total launched/absorbed energy per thread, needed for normalization

srcpw, energytot, energyabs - output host buffers to accummulate total launched/absorbed energy per pattern in photon sharing, needed for normalization of multi-pattern simulations

seeddata - output buffer to store RNG initial seeds for each detected photon for replay

detected - total number of detected photons, output

progress - pinned-memory variable as the progress bar during simulation, updated in GPU and visible from host

all pointers start with g___ are the corresponding GPU buffers to read/write host variables defined above

|-----------------------------------------------> hostdetreclen <--------------------------------------|

|------------------------> partialdata <-------------------|

host detected photon buffer: detid (1), partial_scat (#media), partial_path (#media), momontum (#media), p_exit (3), v_exit(3), w0 (1) |———————————————> w0offset <-————————————||<–— w0 (#srcnum) –—>||<- RF replay (2)->| gpu detected photon buffer: partial_scat (#media), partial_path (#media), momontum (#media), E_escape (1), E_launch (1), w0 (1), w0_photonsharing (#srcnum) cos(w*T),sin(w*T)

param - constants to be used in the GPU, copied to GPU as gcfg, stored in the constant memory

Start multiple CPU threads using OpenMP, one thread for each GPU device to run simultaneously, threadid returns the current thread ID

Use threadid and cfg.deviceid, the compressed list of active GPU devices, to get the desired GPU ID for this thread

Activate the corresponding GPU device

Use the specified GPU's parameters, stored in gpu[gpuid] to determine the maximum time gates that it can hold

Updating host simulation configuration cfg, only allow the master thread to modify cfg, others are read-only

If domain is 2D, the initial photon launch direction in the singleton dimension is expected to be 0

If autopilot mode is not used, the thread and block sizes are determined by user input from cfg.nthread and cfg.nblocksize

If total thread number is not integer multiples of block size, round it to the largest block size multiple

If cfg.respin is positive, the output data have to be accummulated, so we use a double-buffer to retrieve and then accummulate

Master thread computes total workloads, specified by users (or equally by default) for all active devices, stored as cfg.workload[gpuid]

Now we can determine how many photons to be simualated by multiplying the total photon by the relative ratio of per-device workload divided by the total workload

Once per-thread photon number param.threadphoton is known, we distribute the remaiders, if any, to a subset of threads, one extra photon per thread, so we can get the exact total photon number

Total time gate number is computed

Here we determine if the GPU memory of the current device can store all time gates, if not, disabling normalization

Here we decide the total output buffer, field's length. it is Nx*Ny*Nz*Nt*Ns

A 1D grid is determined by the total thread number and block size

A 1D block is determined by the user specified block size, or by default, 64, determined emperically to get best performance

If "-D R" is used, we launch a special RNG testing kernel to retrieve random numbers from RNG and test for randomness

Allocate all host buffers to store input or output data

Ppos: host buffer for initial photon position+weight

Pdir: host buffer for initial photon direction

Plen: host buffer for initial additional photon states

energy: host buffer for retrieving total launched and escaped energy of each thread

Pdet: host buffer for retrieving all detected photon information

Pseed: RNG seed for each thread in non-replay mode, or

Pseed: RNG seeds for photon replay in GPU threads

Allocate all GPU buffers to store input or output data

Allocate pinned memory variable, progress, for real-time update during kernel run-time

Allocate and copy data needed for photon replay, the needed variables include gPseed per-photon seed to be replayed greplayw per-photon initial weight greplaytof per-photon time-of-flight time in s greplaydetid per-photon index for each replayed photon

Allocate and copy source pattern buffer for 2D and 3D pattern sources

Saving detected photon is enabled by default, but in case if a user disabled this feature, a warning is printed

Pre-compute array dimension strides to move in +-x/y/z dimension quickly in the GPU, stored in the constant memory

Inside the GPU kernel, volume is always assumbed to be col-major (like those generated by MATLAB or FORTRAN)

Additional constants to avoid repeated computation inside GPU

Get ready to start GPU simulation here, clock is now ticking ...

Copy all host buffers to the GPU

Copy constants to the constant memory on the GPU

If one has to simulate a lot of time gates, using the GPU global memory requires extra caution. If the total global memory is bigger than the total memory to save all the snapshots, i.e. size(field)*(tend-tstart)/tstep, one simply sets gpu[gpuid].maxgate to the total gate number; this will run GPU kernel once. If the required memory is bigger than the video memory, set gpu[gpuid].maxgate to a number which fits, and the snapshot will be saved with an increment of gpu[gpuid].maxgate snapshots. In this case, the later simulations will restart from photon launching and exhibit redundancies.

The calculation of the energy conservation will only reflect the last simulation.

Outer loop: loop over each time-gate-group, determined by the capacity of the global memory to hold the output data, in most cases, totalgates is 1

Determine the start and end time of the current time-gate-group

Copy param to the constant memory variable gcfg

Inner loop: loop over total number of repetitions specified by cfg.respin, results will be accumulated to field

Each repetition, we have to reset the output buffers, including gfield and gPdet

Start the clock for GPU-kernel only run-time here

Determine template constants for compilers to build specialized binary instances to reduce branching and thread-divergence. If not using template, the performance can take a 20% drop.

ispencil: template constant, if 1, launch photon code is dramatically simplified

isref: template constant, if 1, perform boundary reflection, if 0, total-absorbion boundary, can simplify kernel

issvmc: template constant, if 1, consider the input volume containing split-voxel data, see Yan2020 for details

ispolarized: template constant, if 1, perform polarized light simulations, currently only supports label-based media

Enable reflection flag when c or m flags are used in the cfg.bc boundary condition flags

Launch GPU kernel using template constants. Here, the compiler will create 2^4=16 individually compiled kernel PTX binaries for each combination of template variables. This creates bigger binary and slower compilation time, but brings up to 20%-30% speed improvement on certain simulations.

By now, the GPU kernel has been launched asynchronously, the master thread on the host starts reading a pinned memory variable, gprogress, to realtimely read the completed photon count updated inside the GPU kernel while it is running.

host variable progress is pinned with the GPU variable gprogress, and can be updated by the GPU kernel from the device. We can read this variable to see how many photons are simulated.

Here we use the below formula to compute the 0-100% completion ratio. Only half of the threads updates the progress, and each thread only update the counter 5 times at 0%/25%/50%/75%/100% progress to minimize overhead while still providing a smooth progress bar.

By calling cudaDeviceSynchronize, the host thread now waits for the completion of the kernel, then start retrieving all GPU output data

Here, the GPU kernel is completely executed and returned

now we can estimate and print the GPU-kernel-only runtime

If the GPU kernel crashed or terminated by error during execution, we need to capture it by calling cudaGetLastError and terminate mcx if error happens

Now, we start retrieving all output variables, and copy those to the corresponding host buffers

photoncount returns the actual completely simulated photons returned by GPU threads, no longer used

If '-D M' is specified, we retrieve photon trajectory data and store those to cfg.exportdebugdata and cfg.debugdatalen

If photon detection is enabled and detectors are defined, we retrieve partial-path length data, among others, to cfg.exportdetected and detected

Accumulate volumetric fluence from all threads/devices

If double-precision is used for output, we do not need two buffers; however, by default, we use single-precision output, we need to copy and accumulate two separate floating-point buffers to minimize round-off errors near the source

If respin is used, each repeatition is accumulated to the 2nd half of the buffer

Here is the end of the inner-loop (respin)

If respin is used, copy the accumulated buffer in the 2nd half to the first half

Retrieve accumulated total launched and residual energy from each thread

For MATLAB mex file, the data is copied to a pre-allocated buffer cfg->export* as a return variable

Here is the end of the outer-loop, over time-gate groups

Let the master thread to deal with the normalization and file IO First, if multi-pattern simulation, i.e. photon sharing, is used, we normalize each pattern first

Now we normalize the fluence so that the default output is fluence rate in joule/(s*mm^2) generated by a unitary source (1 joule total).

The raw output directly from GPU is the accumulated energy-loss per photon moving step in joule when cfg.outputtype='fluence', or energy-loss multiplied by mua (1/mm) per voxel (joule/mm) when cfg.outputtype='flux' (default).

If output is flux (J/(s*mm^2), default), raw data (joule*mm) is multiplied by (1/(Nphoton*Vvox*dt)) If output is fluence (J/mm^2), raw data (joule*mm) is multiplied by (1/(Nphoton*Vvox))

If output is energy (joule), raw data is simply multiplied by 1/Nphoton

In photon sharing mode, where multiple pattern sources are simulated, each solution is normalized separately

If not running as a mex file, we need to save volumetric output data, if enabled, as a file, with suffix specifed by cfg.outputformat (mc2,nii, or .jdat or .jbat)

If not running as a mex file, we need to save detected photon data, if enabled, as a file, either as a .mch file, or a .jdat/.jbat file

If not running as a mex file, we need to save photon trajectory data, if enabled, as a file, either as a .mct file, or a .jdat/.jbat file

Copying GPU photon states back to host as Ppos, Pdir and Plen for debugging purpose is depreciated

Report simulation summary, total energy here equals total simulated photons+unfinished photons for all threads

Simulation is complete, now we need clear up all GPU memory buffers

The below call in theory is not needed, but it ensures the device is freed for other programs, especially on Windows

Lastly, free all host buffers, the simulation is complete.

1.8.13

1.8.13